Abstract:

ZFS was created by Sun Microsystems to rethink the storage subsystem of computing systems by simultaneously expanding capacity and security exponentially while collapsing the formerly separate layers of storage (volume managers, file systems, RAID, etc.) into a single layer, in order to deliver capabilities that would normally be very complex to achieve. One such innovation introduced in ZFS was the ability to place inexpensive, limited-life solid state storage (flash media), which may offer fast (or at least more deterministic) random read or write access, into the storage hierarchy where it can enhance the performance of less deterministic rotating media. This paper discusses the process of upgrading directly attached external mirrored storage to network attached ZFS storage.

Case Study:

A particular Media Design House had formerly used multiple external mirrored drive sets on desktops, as well as racks of archived optical media, to meet its storage requirements. One pair of (formerly high-end) 400 Gigabyte FireWire drives lost a drive. A second pair of (formerly high-end) 500 Gigabyte FireWire drives lost a drive within a month. A media wall of CDs and DVDs was getting cumbersome to retain.

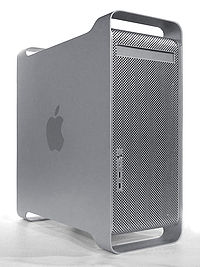

The goal was to consolidate the mirrored sets of current data, recent data, and long-term old data onto a single set of mirrored media. The machine the business was most concerned about was a high-end 64-bit dual 2.5GHz Power Mac G5 deskside server running MacOSX.

The introduction of mirrored external higher-capacity media (1.5 TB disks with eSATA, FireWire, and USB 2.0 options) proved far too problematic. These drives had just been released and were unfortunately buggy. After an improper shutdown, or a proper shutdown where the media did not flush the final writes from cache in time, the mirrored set had to be rebuilt upon the next startup. The rebuild would take over a day, and access time to the media was tremendously degraded while it ran.

Moving a 1.5TB drive to the external USB storage connector on a new top-of-the-line Linksys WRT610N Dual-Band N Router with Gigabit Ethernet and Storage Link proved impossible. The thought was that the business would copy the data by hand from the desktop to the network storage nightly over Gigabit Ethernet. Unfortunately, the embedded Linux file system did not support USB drives of this size. The embedded Linux in the WRT610N also did not support mirroring or SNMP for remote management.

The decision was to hold off on any final choice until the next release of MacOSX, where a real enterprise-grade file system, ZFS, would be added.

With the withdrawal of ZFS from the next Apple operating system, the decision was made to migrate all the storage from the Media Design House onto a single deskside ZFS server, which could handle the company's storage requirements. Solaris 10 was selected, since it offered a stable version of ZFS under a nearly Open Source operating system, without being on the bleeding edge as OpenSolaris was. If the licensing of Solaris 10 ever changed, it was understood that OpenSolaris could be leveraged, so long-term data storage was safe.

Selected Hardware:

Two 1.5 Terabyte Seagate FreeAgent XTreme external drives were selected for storage. A variety of interfaces were supported, including eSATA, FireWire 400, and USB 2.0. At the time, this was the highest-capacity external disk with the widest variety of high-speed storage interfaces that could be purchased off the shelf at local computer retailers. 2 Terabyte drives were expected to be released within the next 9 months, so it was important that the system would be able to accept them without BIOS or file system size limitations. These were considered "green" drives, meaning that they would spin down when not in use to conserve energy.

A dual 450MHz deskside Sun Ultra60 Creator 3D with 2 Gigabytes of RAM was chosen for the solution. These were well-built machines with a low current price point, and they could run current releases of Solaris 10 with a modern ZFS file system. Dual 5-port USB PCI cards were selected (as the last choice, after eSATA and FireWire cards proved incompatible with the Seagate external drives... more on this choice later). Solaris offered security with stability, since few viruses and worms target this enterprise and managed-services grade platform, and a file system superior to that of any other platform on the market at the time (as well as today): ZFS. SPARC offered long-term equipment supportability, since 64-bit had been supported for a decade while consumer-grade Intel and AMD CPUs were still struggling to get off of 32-bit.

The Apple laptops and Deskside Server all supported Gigabit Ethernet and 802.11N. Older Apple systems supported 100 Megabit Ethernet and 802.11G. A Gigabit Ethernet card for the Sun Ultra60 was purchased, in addition to several Gigabit Ethernet switches for the office. A newly released Linksys dual-band Wireless N router with four Gigabit Ethernet ports was also purchased, the first of a new generation of wireless routers in the consumer market. This new wireless router would offer simultaneous access to network resources over full-speed 2.4GHz 802.11G and 5GHz 802.11N wireless systems. The Gigabit Ethernet switches were also considered "green" switches, since power was greatly conserved when ports were not in use.

CyberPower UPSs were chosen to protect every part of the solution, from the disks to the Sun server, the switches, and the wireless access point. These were considered "green" UPSs, since their power consumption was far less than that of competing units, and their displays clearly showed load, battery capacity, input voltage, output voltage, and remaining run time.

Speed Bumps:

It proved notoriously difficult to acquire eSATA cards which would work reliably in the 64-bit PCI bus of the Apple Deskside Server and the Sun Deskside Workstation. The drives worked independently under FireWire, but two drives would not work reliably on the same machine with FireWire. A pair of FireWire cards was also purchased in order to move the drives to independent controllers, but this did not work under either MacOSX or Solaris with these external Seagate drives. The movement to USB 2.0 was a last-ditch effort. Under MacOSX, rebuild times ran more than 24 hours, which drove the decision to move to Solaris with ZFS. Two 5-port USB 2.0 cards were selected, one for each drive, with enough extra ports to add more storage over the next 4 years. The USB 2.0 cards had a firmware bug, which required a patch to Solaris 10 in order to make the cards operate at full USB 2.0 speed.

Implementation:

A mirror of the two 1.5 Terabyte drives was created and the storage was shared from ZFS with a couple of simple commands.

The configuration is as shown below.

    Ultra60/user# zpool status
      pool: zpool2
     state: ONLINE
    config:

            NAME          STATE     READ WRITE CKSUM
            zpool2        ONLINE       0     0     0
              mirror      ONLINE       0     0     0
                c4t0d0s0  ONLINE       0     0     0
                c5t0d0s0  ONLINE       0     0     0

    errors: No known data errors

    Ultra60/user# zfs get sharenfs zpool2
    NAME    PROPERTY  VALUE  SOURCE
    zpool2  sharenfs  on     local
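The exact commands used to build the pool are not recorded here, but a mirror like the one shown in the status output is normally created with `zpool create` and shared with `zfs set sharenfs=on`. The following is a minimal sketch under that assumption. The pool and device names come from the status output above; the `DRY_RUN` variable and the `run` and `create_mirror_and_share` helpers are illustrative names, not part of ZFS. By default the script only prints the commands it would run.

```shell
#!/bin/sh
# Sketch only: DRY_RUN, run() and create_mirror_and_share() are hypothetical
# helpers. With DRY_RUN=1 (the default) nothing is changed on the system.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"          # print the command instead of executing it
    else
        "$@"               # execute the command as given
    fi
}

create_mirror_and_share() {
    pool="$1"; disk_a="$2"; disk_b="$3"
    # Build a two-way mirror from the two external drives.
    run zpool create "$pool" mirror "$disk_a" "$disk_b"
    # Export the pool's top-level file system over NFS.
    run zfs set sharenfs=on "$pool"
}

create_mirror_and_share zpool2 c4t0d0s0 c5t0d0s0
```

Running the script with `DRY_RUN=0` as root would execute the two commands for real, which destroys any existing data on the named devices.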

Implementation Results:

Various tests were conducted, such as:

- Pulling the power out of a USB disk during read and write operations

- Pulling the USB cord out of a USB disk during read and write operations

- Pulling the power out of the SPARC Workstation during read and write operations
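After fault tests like these, the state of the mirror can be checked with `zpool status -x`, which reports only pools that have problems. The sketch below is one possible way to script that check; it is not the procedure used in this case study. The `is_healthy` and `check_pool` helpers are hypothetical names, and the script assumes the healthy report contains the phrase "is healthy" or "all pools are healthy".

```shell
#!/bin/sh
# is_healthy: succeed when the given text looks like a healthy
# `zpool status -x` report (assumed wording, see the lead-in).
is_healthy() {
    case "$1" in
        *"all pools are healthy"*|*"is healthy"*) return 0 ;;
        *) return 1 ;;
    esac
}

# check_pool: query one pool and summarize the result.
check_pool() {
    pool="$1"
    report="$(zpool status -x "$pool" 2>&1)"
    if is_healthy "$report"; then
        echo "$pool: healthy"
    else
        echo "$pool: needs attention"
        echo "$report"
        return 1
    fi
}

# Only query the pool when the zpool command is available on this host.
if command -v zpool >/dev/null 2>&1; then
    check_pool zpool2 || echo "consider running: zpool scrub zpool2"
fi
```

A scrub walks all data in the pool and verifies checksums, so it is a reasonable follow-up after a disk has been reattached.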

Even though the SPARC CPUs were vastly slower in raw clock speed than the G5 CPUs in the Apple deskside unit, the overall performance of the network attached storage was vastly superior to the former desktop mirroring attempt using the high-capacity drives.

Copying the data across the Ethernet network experienced some short delays while the disks spun up from sleep mode. With future versions of ZFS projected to support both a Level 2 ARC for reads and separate intent logging for writes, the performance was considered more than acceptable until Solaris 10 received those upgrades.
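On ZFS releases that support these features, a Level 2 ARC device is attached with `zpool add <pool> cache <device>` and a separate intent log device with `zpool add <pool> log <device>`. The sketch below only prints the commands such an upgrade might use; it was not part of this implementation. The `plan_flash_devices` helper and the device names `c6t0d0` and `c7t0d0` are hypothetical.

```shell
#!/bin/sh
# Sketch only: prints the commands rather than running them.
# c6t0d0 and c7t0d0 are hypothetical flash device names.
plan_flash_devices() {
    pool="$1"; cache_dev="$2"; log_dev="$3"
    # Read cache (Level 2 ARC) device.
    echo "zpool add $pool cache $cache_dev"
    # Separate intent log device for synchronous writes.
    echo "zpool add $pool log $log_dev"
}

plan_flash_devices zpool2 c6t0d0 c7t0d0
```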

The system was implemented and accepted within the Media Design House. The process of moving old desktop mirrors and racks of CD and DVD media to Solaris ZFS storage began.
